Quine Enterprise Cluster Resilience

Quine Enterprise is built from the ground up to run in a distributed fashion across a cluster of machines communicating over the network. Unreliable network communication can make this an extremely difficult challenge. This page describes the clustering model and how it provides resilience in a complex deployment environment.

Cluster Topology and Member Identity

The cluster topology is defined at startup. The cluster has a fixed operational size specified by a config setting. An operating cluster will have this many members participating in the overall graph. Each member is assigned a unique integer defining its position in the cluster. Once the cluster has filled all positions with live members, the cluster enters an Operating state. Additional members that join the cluster are used as “hot-spares” to take over from any failed member of the cluster.

Member Identity

Cluster members are defined by both their position in the cluster and the host address/port they use to join the cluster. The position is a property of the cluster, while host address and port are properties of the joining member.

The position is an abstract identifier denoting a particular portion of the graph to serve. It has a value from 0 until quine.cluster.target-size of the cluster (originally set in the config at startup). The position is a logical identifier that is an attribute of the cluster and not directly tied capabilities or properties any member who might occupy that position. When operating, a member will fill exactly one position in the cluster. If that member leaves the cluster, the position will remain as empty or get filled by a new member.

Each member joins the cluster with a specific host address and port determined statically or dynamically when the member starts. These are properties of the joining members, and must be unique among all participants trying to join the cluster. A new member joining with the same address and port is treated as replacing the previous member with the same address/port. If this occurs, the original member is ejected from the cluster and the new member is added. A member’s address and port are specified in the config settings: quine.cluster.this-member.address and quine.cluster.this-member.port

The host address and port can be specified literally, or they can be determined dynamically at startup. The address value will be determined dynamically if either of the following values are provided:

<getHostAddress>will use the host IP found at runtime<getHostName>will use the host DNS name found at runtime

The port can be set to 0, which will assign it automatically at startup to a port available on that host.

Cluster Seeds

Cluster seeds facilitate any new machine trying to join the cluster. A seed is defined as the host address and port of a specific member. To form a cluster, at least one seed in the joining member’s configuration much be reachable over the network, and that seed must be part of the cluster. It is recommended to include multiple seed nodes in each member’s configuration in case that member needs to join when one or more member (particular the seed node it specifies) is unreachable. This can be done by specifying a list in the config file, or at the command line in the following fashion, replacing the number in configuration key with the index of the item in the list being referred to:

quine.cluster.seeds.0.address=172.31.1.100

quine.cluster.seeds.0.port=25520

quine.cluster.seeds.1.address=172.31.1.101

quine.cluster.seeds.1.port=25520

A member can specify its own host address and port as the seed node. If no other seeds nodes are provided or are unreachable, that member will form a cluster on its own and be available to add other members as the cluster seed.

Cluster Formation

As members join the cluster, they are assigned a position. When all positions (determined by target-size) have been filled, the cluster will transition into an operating state. A cluster in an operating state is fully available and is ready to be used.

Cluster Life-cycle

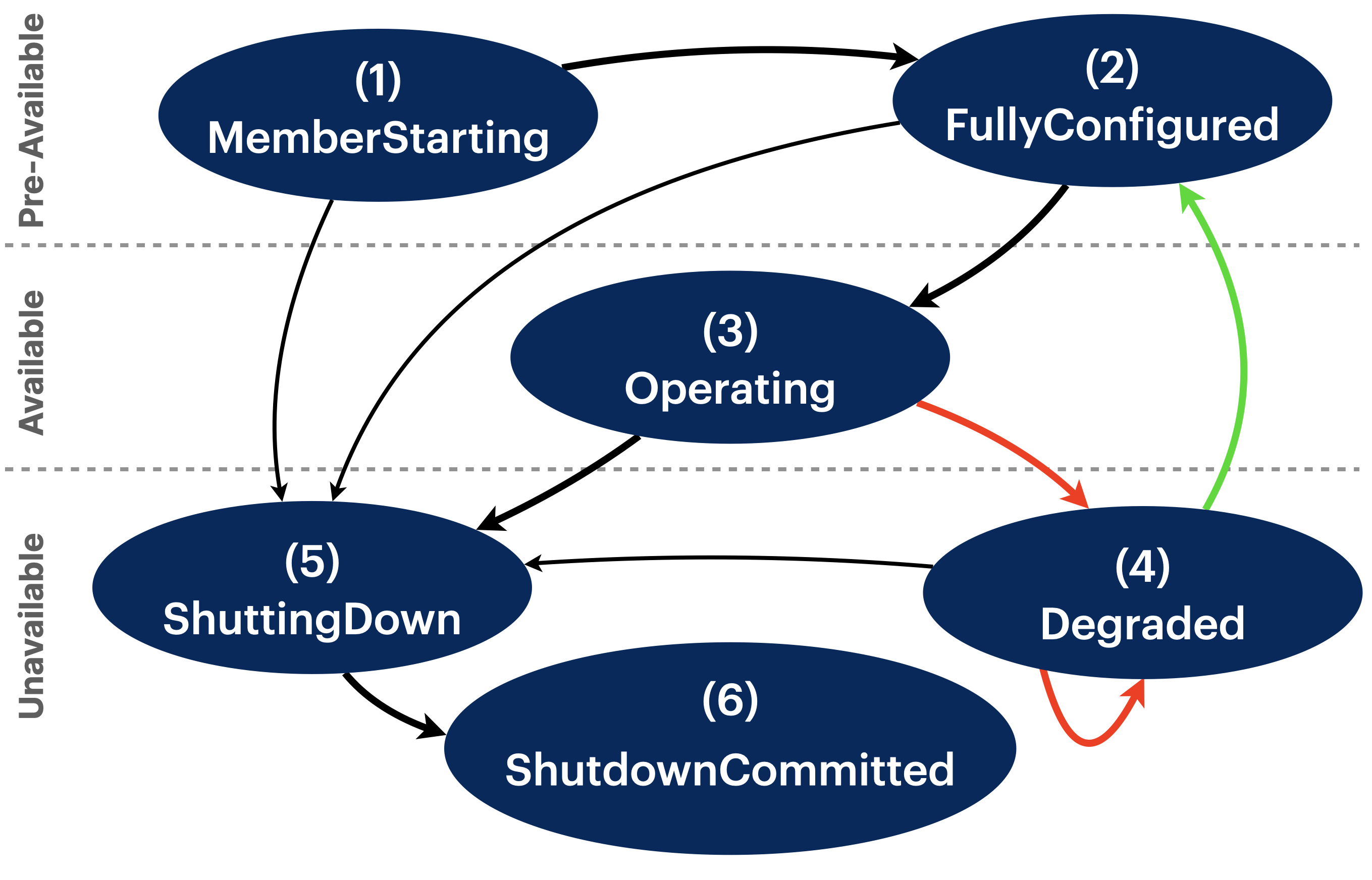

A cluster has six phases to its life-cycle:

MemberStarting- a member is starting. It may or may not have found the cluster yet.FullyConfigured- the cluster has filled each position required to operate the cluster.Operating- the cluster is fully operational.Degraded- a previously operating cluster has at least one position unfilled and the cluster cannot operate until the empty position is filled.ShuttingDown- shutdown has been requested and the member is preparing to terminate.ShutdownCommitted- shutdown preparation is complete and the cluster member will shut down.

In general, these phases progress linearly. Exceptions to this linear progression include the possibility that a cluster can transition:

- from

OperatingtoDegradedif a positioned member is lost - from

DegradedtoFullyConfiguredwhen an empty position has been filled and the number of members reaches thetarget-size - from

Degradedback toDegradedwhen the number of positioned members changes (up or down) but is still below thetarget-size - from phases 1-4 to

ShuttingDown

Hot-spares

Any members that join the cluster beyond the required target-size will join the cluster as hot-spares. A hot-spare is a member who serves no part of the graph but is available to take over in case any member goes down.

If a member serving part of the graph goes down, a hot-spare replaces it in the vacated position. If that member rejoins the operating cluster, it will be assigned as a role as hot-spare. Hot-spares function as seed nodes just as any other cluster member.

Handling Failure

Failure handling is entirely automatic in Quine Enterprise. Internal processes use distributed leader election, gossip protocols, failure detection, and other distributed systems techniques to detect server failure and decide on the best course of action.

Failure Detection

Detecting failed machines in the context of distributed systems is a fundamental challenge. It is impossible to determine on one system whether another system has failed, or whether it is just slow to respond—maybe because it is working hard. This fundamental logical constraint requires every distributed system to use time to make a judgement call about the health of other systems.

Member Failure Detector

Quine Enterprise uses Phi-Accrual Failure Detection to monitor the health of all cluster members. When a cluster member is unresponsive, it results in an exponentially growing confidence that the unresponsive member should be interpreted as failed. When the confidence is high enough, the decision is made by the clusters elected leader to treat the unresponsive member as unreachable. An unreachable member is given a grace period (primarily based on the cluster composition) before it is ejected from the cluster.

When an active member is ejected, the cluster enters a degraded state. From that point on, if the machine resumes activity, it will not be allowed to participate in the cluster. When a Quine Enterprise cluster member experiences this, it will shut itself down. If the application is started again on the same host and port, it will be able to re-join the cluster. It may find that its previous position is still open and then fill slot again after restarting, or if a hot-spare was available, it will find that its position was quickly filled, and the restarted machine will become a hot-spare.

Cluster Partition Detection

Networks are inherently unreliable. A cluster communicating across a network faces the logical danger that a partition in the network can result in a division of the cluster where each side believes it is the surviving half. To overcome this challenge, Quine Enterprise uses Pekko’s Split Brain Resolver to detect this situation and determine which cluster partition should survive.

Automatic Failure Recovery

With Quine Enterprise able to detect failed members, restore them quickly from hot-spares, and protect against cluster partitions, the operational demands on the people who manage it are greatly reduced. A Quine Enterprise cluster will remain operating as long as there are enough active members to meet or exceed the target-size. Any number of hot-spares can be added to the cluster to achieve any level of resilience the operator desires.

Inspecting the Live Cluster

The current status and recent history of the cluster is available for human or programmatic inspection from the administration status endpoint: GET /api/v1/admin/status. See the built-in documentation or reference section of the documentation for more details.